13. Modeling Hands in 3D Made Simple

A gentle introduction to the MANO model

Introduction

If you have ever tried immersing yourself in virtual reality with a Meta Quest headset, you know how cool it is to see your hands come alive in a digital world with smooth, natural movements and realistic skin textures. Behind the scenes, a model captures and renders every subtle detail of hand motion, from a slight bend of a finger to the rotation of the wrist.

Today, we will take a closer look at MANO (hand Model with Articulated and Non-rigid defOrmations) [1], a model from the Max Planck Institute for Intelligent Systems that was introduced in 2017 and remains very popular. MANO represents human hands in 3D with high fidelity and is widely used in applications such as computer graphics and virtual reality.

The high-level overview

At its core, MANO is deeply human-inspired, built to replicate the anatomy of actual hands. To achieve that, we need to model two separate components:

🦴 The position of the joints (skeleton)

This defines the internal structure and motion of the hand through a set of articulated points, each with a specific spatial orientation relative to a reference. Together, they form a hierarchical structure that starts at the wrist, moves through the palm, and extends into each finger and its phalanges, just like real bones do. Each joint can rotate freely in 3D, supporting complex gestures and realistic finger articulation.

🖐🏻 The rendering of the surface (mesh)

This part models how the outer surface of the hand changes when the joints move, emulating the role of the skin. Given a hand pose, the outer 3D mesh must deform realistically to simulate the bending of fingers, the stretching and compression of the soft tissues, and the formation of natural creases and folds. On top of these basic pose-dependent deformations, MANO can also account for person-specific anatomical variations, allowing the mesh in a fixed pose to adapt to a wide range of hand types.

The low-level implementation details

Now that we know what we are trying to achieve, let’s see how it’s done in greater depth. Nothing too scary, just some vectors of numbers and an off-the-shelf algorithm!

👋🏻 Hand movements

As shown in the figure below, the MANO model defines 21 keypoints arranged in a hierarchical structure, representing all five fingers plus a root node at the wrist. However, the hand motion is driven solely by the 16 joints containing the root and the internal nodes of the graph, i.e. excluding the 5 fingertips.
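To make the counting concrete, here is a minimal sketch of such a hierarchy as a parent array. The finger order and joint names are assumptions made for illustration and may not match the ordering used by any particular MANO implementation.

```python
# Illustrative kinematic tree: index 0 is the wrist, then three joints per finger.
# The finger order and names below are assumptions for this sketch.
FINGERS = ["index", "middle", "ring", "pinky", "thumb"]

parents = [-1]      # the wrist (root) has no parent
names = ["wrist"]
for finger in FINGERS:
    for k in range(3):
        # The first joint of each finger attaches to the wrist;
        # the following ones attach to the previous joint of the same finger.
        parents.append(0 if k == 0 else len(parents) - 1)
        names.append(f"{finger}_{k}")

num_joints = len(parents)                   # 16 rotating joints
num_keypoints = num_joints + len(FINGERS)   # plus 5 fingertips = 21 keypoints
num_pose_params = 3 * num_joints            # 3 rotation values per joint = 48


def chain_to_root(j):
    """Return the joint indices on the path from joint j up to the wrist."""
    chain = [j]
    while parents[chain[-1]] != -1:
        chain.append(parents[chain[-1]])
    return chain


print(num_joints, num_keypoints, num_pose_params)
print([names[j] for j in chain_to_root(3)])
```

Rotating a joint moves everything below it in the tree, which is why bending the base of a finger carries the whole finger along.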

Each joint's rotation is stored in an axis-angle representation: a 3D vector whose direction gives the rotation axis and whose length gives the rotation angle. With 16 joints and 3 values per joint, 48 rotation parameters in total are enough to define and animate any hand pose on the underlying skeleton.
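As a rough illustration, an axis-angle vector can be turned into a rotation matrix with Rodrigues' formula. The function name and the choice of which joint to bend are hypothetical; this is a sketch of the general technique, not code taken from MANO.

```python
import numpy as np


def axis_angle_to_matrix(rotvec):
    """Convert an axis-angle vector (3,) to a 3x3 rotation matrix (Rodrigues' formula)."""
    rotvec = np.asarray(rotvec, dtype=float)
    angle = np.linalg.norm(rotvec)
    if angle < 1e-8:
        return np.eye(3)  # a zero vector means no rotation
    x, y, z = rotvec / angle
    # Skew-symmetric cross-product matrix of the unit axis.
    K = np.array([[0.0, -z, y],
                  [z, 0.0, -x],
                  [-y, x, 0.0]])
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)


# A full pose: 16 joints x 3 axis-angle values = 48 parameters.
pose = np.zeros((16, 3))
pose[1] = [0.0, 0.0, np.pi / 2]  # rotate joint 1 by 90 degrees about the Z axis

rotations = np.stack([axis_angle_to_matrix(r) for r in pose])  # shape (16, 3, 3)
```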

Since this full, unconstrained space can include all sorts of unnatural or impossible movements, it is common when doing pose fitting to use a few PCA components (typically 6 to 10) learned from a dataset of real hand motions [1]. Each basis vector is a 45-dimensional pose covering the 15 finger joints (the 3 global wrist rotation parameters are handled separately), and a hand pose expressed as a combination of these basis vectors stays close to the manifold of natural hand movements.
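The mapping from a handful of PCA coefficients back to a full pose can be sketched as follows. The mean pose and basis here are random placeholders (in the real model they are learned from data), and the function name is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Placeholder PCA data: random here, learned from real hand motions in practice.
num_components = 6
pose_mean = np.zeros(45)                             # mean pose of the 15 finger joints
pose_basis = rng.normal(size=(num_components, 45))   # one 45-D basis vector per row


def pca_to_full_pose(global_rot, coeffs):
    """Expand PCA coefficients into 48 axis-angle parameters."""
    finger_pose = pose_mean + coeffs @ pose_basis      # (45,) finger joint rotations
    return np.concatenate([global_rot, finger_pose])   # (48,) wrist rotation first


coeffs = np.array([1.0, -0.5, 0.0, 0.0, 0.0, 0.0])
full_pose = pca_to_full_pose(np.zeros(3), coeffs)
print(full_pose.shape)
```

A fitting procedure then only has to search over the few coefficients plus the wrist rotation instead of all 48 values.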

⚙️ Skin rendering and deformation

After the skeleton is in position, the next step is to render the 3D mesh of the hand. The mesh consists of 778 vertices and 1538 triangular faces, and each vertex is influenced by one or more joints in the kinematic tree, depending on a set of weights.

MANO uses a common technique in 3D graphics called Linear Blend Skinning (LBS) to deform the reference mesh based on the current pose. While the blending itself is computed using a deterministic algorithm, the mesh can be further personalized using a set of shape parameters, referred to as betas.

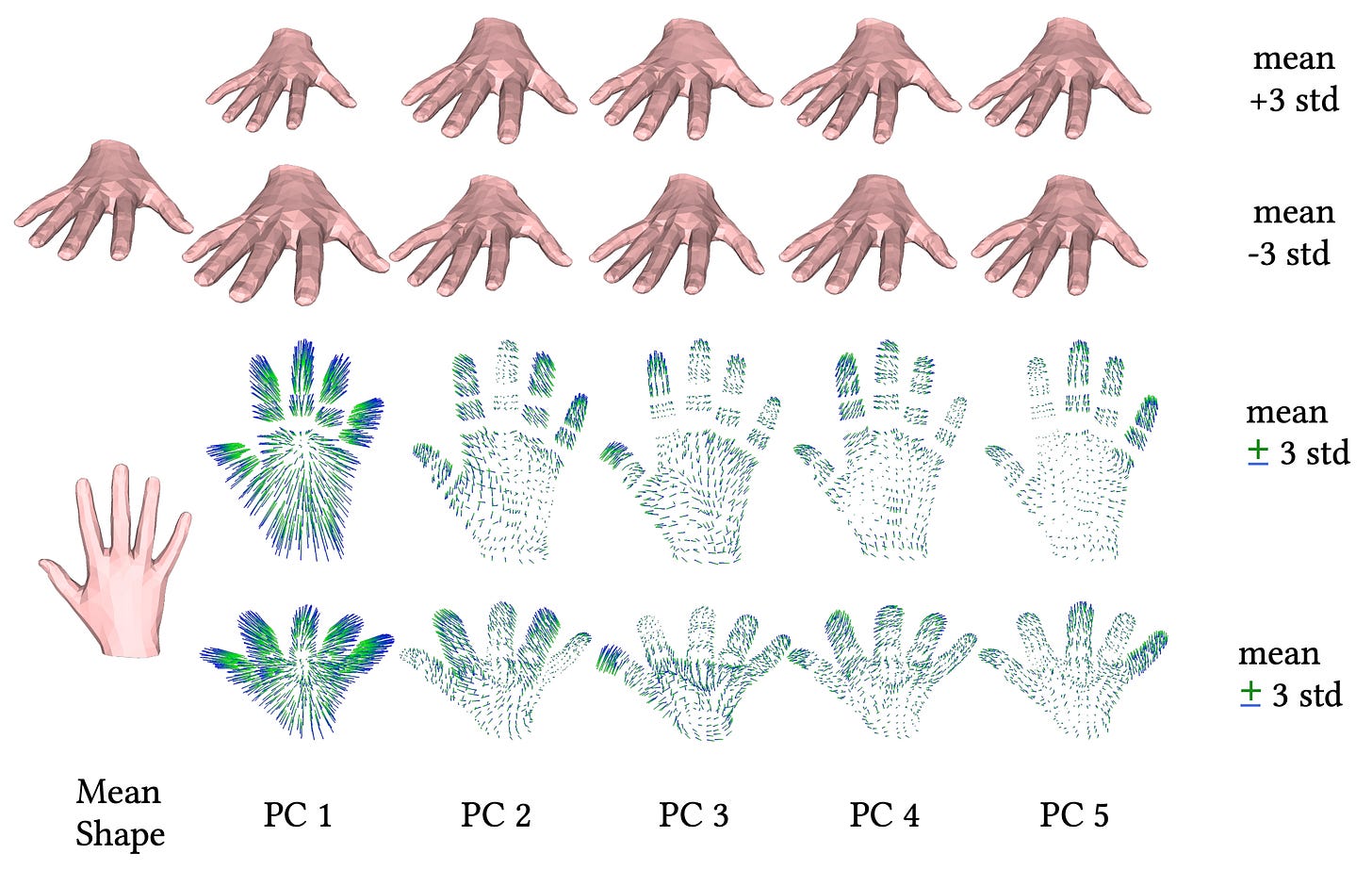

The shape parameters control high-level individual anatomical features (e.g. finger length, palm width, and overall hand proportions) and are applied to the template mesh before any articulation occurs. Similar to the pose, the shape parameters are learned from real 3D hand scans using Principal Component Analysis (PCA), allowing the model to represent realistic hand variations across different subjects. Typically, MANO uses 10 shape parameters, combining the top 10 PCA components learned from a dataset of approximately 1000 high-resolution 3D scans of 31 subjects [1].
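Putting the two steps together, a simplified sketch of "shape first, then skinning" might look like the code below. All arrays are random placeholders with MANO-like sizes, the names (`shape_and_skin`, `shape_dirs`, `joint_regressor`) are hypothetical, and the pose-dependent corrective offsets that the real model adds are left out for brevity.

```python
import numpy as np


def rodrigues(rotvec):
    """Axis-angle vector (3,) -> 3x3 rotation matrix."""
    angle = np.linalg.norm(rotvec)
    if angle < 1e-8:
        return np.eye(3)
    x, y, z = rotvec / angle
    K = np.array([[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]])
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)


def shape_and_skin(template, shape_dirs, betas, joint_regressor, weights, parents, pose):
    # 1) Shape: add a linear combination of shape directions to the template mesh.
    v_shaped = template + shape_dirs @ betas          # (V, 3)
    # 2) Rest-pose joint locations, here regressed from the shaped vertices.
    joints = joint_regressor @ v_shaped               # (J, 3)
    # 3) Forward kinematics: chain local transforms from the root down the tree.
    num_joints = len(parents)
    world = np.zeros((num_joints, 4, 4))
    for j in range(num_joints):
        local = np.eye(4)
        local[:3, :3] = rodrigues(pose[j])
        if parents[j] < 0:
            local[:3, 3] = joints[j]
            world[j] = local
        else:
            local[:3, 3] = joints[j] - joints[parents[j]]
            world[j] = world[parents[j]] @ local
    # 4) Make each transform relative to the rest pose of its joint.
    for j in range(num_joints):
        world[j, :3, 3] -= world[j, :3, :3] @ joints[j]
    # 5) Linear blend skinning: per-vertex weighted average of joint transforms.
    blended = np.einsum("vj,jab->vab", weights, world)             # (V, 4, 4)
    v_hom = np.concatenate([v_shaped, np.ones((len(v_shaped), 1))], axis=1)
    return np.einsum("vab,vb->va", blended, v_hom)[:, :3]


# Random placeholders with MANO-like sizes (not real model data).
rng = np.random.default_rng(0)
V, J, B = 778, 16, 10
parents = [-1, 0, 1, 2, 0, 4, 5, 0, 7, 8, 0, 10, 11, 0, 13, 14]
template = rng.normal(size=(V, 3))
shape_dirs = 0.01 * rng.normal(size=(V, 3, B))
joint_regressor = rng.random((J, V))
joint_regressor /= joint_regressor.sum(axis=1, keepdims=True)
weights = rng.random((V, J))
weights /= weights.sum(axis=1, keepdims=True)   # skinning weights sum to 1 per vertex

betas = rng.normal(size=B)
pose = np.zeros((J, 3))                         # all-zero pose = rest pose
vertices = shape_and_skin(template, shape_dirs, betas, joint_regressor, weights, parents, pose)
print(vertices.shape)
```

With an all-zero pose every joint transform is the identity, so the output is simply the shaped template. Rotating only the root joint moves the whole mesh rigidly around the wrist.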

Last but not least, a nice property of MANO is that it is designed to be fully differentiable, enabling optimization and pose fitting through gradient descent. This is essential for tasks such as modeling hand-object interactions, estimating 3D hand poses from RGB images, and learning-based approaches with end-to-end training.
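To give a feel for what fitting by gradient descent means, here is a toy example that recovers shape parameters from a target mesh. It assumes a purely linear shape model with a random placeholder basis and a hand-written gradient; real pipelines typically rely on an automatic differentiation framework (for instance the PyTorch implementations in [3] and [4]) and also optimize the pose.

```python
import numpy as np

rng = np.random.default_rng(0)
V, B = 778, 10

# Placeholder linear shape model on flattened vertices (not real MANO data).
template = rng.normal(size=V * 3)
# Orthonormal columns keep the toy problem well conditioned for a fixed step size.
shape_basis, _ = np.linalg.qr(rng.normal(size=(V * 3, B)))

# Synthetic target mesh generated from known shape parameters.
true_betas = rng.normal(size=B)
target = template + shape_basis @ true_betas

betas = np.zeros(B)
lr = 0.25
for step in range(50):
    residual = template + shape_basis @ betas - target
    grad = 2.0 * shape_basis.T @ residual   # gradient of the squared error w.r.t. betas
    betas -= lr * grad

print(np.abs(betas - true_betas).max())
```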

Conclusions

The MANO model provides a compact and expressive way to represent 3D human hand geometry and articulation for tasks such as hand modeling, tracking, and animation. It is a parametric model, driven by pose parameters that control joint rotations and shape parameters that control the hand's proportions.

Of course, rendering hands is just one small part of modeling the full human body. Earlier work (SMPL) explored full-body models with fixed hands [5], and MANO was actually developed as a follow-up. I hope it is not hard to imagine how the same approach works for the full body, including the hands and face. The core idea remains the same, only the number of keypoints and shape parameters increases.

If you’re looking for one of the best hand datasets out there, the ARCTIC dataset [6] is a great place to start. Thanks for getting this far, and see you in the next one!

References

[1] Embodied Hands: Modeling and Capturing Hands and Bodies Together

[2] 3D hand reconstruction from a single image based on biomechanical constraints

[3] manotorch: MANO hand model in PyTorch

[4] A PyTorch Implementation of MANO hand model

[5] SMPL: A Skinned Multi-Person Linear Model

[6] ARCTIC: A Dataset for Dexterous Bimanual Hand-Object Manipulation