Introduction

Back in 2015, neural networks had yet to enter the spotlight, but they were already delivering impressive results in image classification and speech recognition. However, despite being built on well-known mathematical methods and having far fewer parameters than today’s models, their inner workings remained difficult to understand.

In an effort to visualize and interpret what happens inside image classification models, researchers at Google ended up with AI-generated art as a surprising byproduct. DeepDream [1], a technique that enhances and amplifies the patterns a network recognizes, reveals the intricate and often surreal representations these models learn while producing dreamlike, psychedelic images. Let’s dive in and see why reversing the usual prediction process is so fascinating.

Motivation and challenges

The method is based on convolutional neural networks (CNNs), a class of deep learning models that process visual data through multiple layers, each capturing a different level of abstraction. As the image moves through these layers, its spatial resolution typically decreases while its representational complexity increases.

This means earlier layers might detect simple, common patterns like edges and basic textures, while deeper layers combine this information to recognize more complex features or entire objects, ultimately determining what the image represents.
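To make the shrinking resolution concrete, here is a small Python sketch that traces feature-map shapes through a stack of convolution and pooling layers using the standard output-size formula floor((n + 2p − k) / s) + 1, for input size n, kernel k, stride s, and padding p. The layer list is hypothetical and only meant to illustrate the trend; it is not a description of the network used for DeepDream.

```python
# Illustrative layer stack: (name, kernel, stride, padding, output channels).
# The values are hypothetical, chosen only to show the trend.
LAYERS = [
    ("conv1", 7, 2, 3, 64),
    ("pool1", 3, 2, 1, 64),
    ("conv2", 3, 1, 1, 192),
    ("pool2", 3, 2, 1, 192),
    ("conv3", 3, 1, 1, 480),
    ("pool3", 3, 2, 1, 480),
]


def out_size(n: int, kernel: int, stride: int, padding: int) -> int:
    """Output height/width of a conv or pooling layer: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - kernel) // stride + 1


def trace_shapes(size: int) -> list[tuple[str, int, int]]:
    """Return (layer name, spatial size, channels) after each layer."""
    shapes = []
    for name, k, s, p, channels in LAYERS:
        size = out_size(size, k, s, p)
        shapes.append((name, size, channels))
    return shapes


for name, size, channels in trace_shapes(224):
    print(f"{name}: {size}x{size}x{channels}")
```

For a 224×224 input, the spatial size drops from 112 to 14 while the channel count grows from 64 to 480: fewer positions, but richer descriptions at each one.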

The challenge is to understand what exactly happens at each layer. That’s because during training the entire network is optimized end-to-end, with no constraints on how individual layers function or which patterns they should focus on. We just feed an image into the input layer, which then talks to the next one, until eventually the output layer is reached and we get a classification result.

From noise to class representations

A creative way to understand what a neural network has learned is to reverse its usual function. Rather than classifying an image, we ask it to generate one in such a way that a particular interpretation is enforced.

Say you trained a model on multiple classes and want to know what sort of image would result in the classification “Banana”. What you can do is start with an image full of random noise, and gradually tweak it towards what the model considers a banana.

Normally, the input is fixed and training changes the weights. Here we do the opposite: the trained network stays frozen, and we tweak the input until it is classified the way we want. This works because the classification loss is differentiable with respect to the input pixels, not just the weights, so we can minimize it with gradient descent on the image itself.
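Here is a minimal NumPy sketch of this idea, under loud assumptions: the "trained" model is a hypothetical frozen linear softmax classifier standing in for a CNN, and the input gradient is written out by hand instead of coming from autodiff. The point to notice is that the update touches only the pixels `x`, never `W` or `b`.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical frozen "trained" model: a linear softmax classifier over
# flattened 8x8 images. A real setup would use a pretrained CNN and autodiff.
NUM_CLASSES, SIDE = 3, 8
W = rng.normal(size=(NUM_CLASSES, SIDE * SIDE))  # frozen weights
b = np.zeros(NUM_CLASSES)                         # frozen biases


def softmax(z):
    e = np.exp(z - z.max())  # subtract max for numerical stability
    return e / e.sum()


def input_gradient(x, target):
    """Gradient of the cross-entropy for `target` with respect to the INPUT x."""
    p = softmax(W @ x + b)
    return W.T @ (p - np.eye(NUM_CLASSES)[target])


def optimize_input(target, steps=300, lr=0.01):
    x = rng.normal(scale=0.1, size=SIDE * SIDE)  # start from random noise
    for _ in range(steps):
        x -= lr * input_gradient(x, target)      # update pixels; W and b never change
    return x.reshape(SIDE, SIDE)


banana = 2  # hypothetical index of the "Banana" class
img = optimize_input(banana)
print(np.argmax(W @ img.ravel() + b))  # predicted class of the generated image
```

With a real CNN, an autodiff framework would compute the same input gradient for you.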

By itself, this doesn’t work well, because the final image still looks somewhat like noise. It works much better if we impose a prior constraint that the image should have statistics similar to natural images, such as neighboring pixels being correlated.
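The exact prior isn’t specified here, so the sketch below assumes one simple choice: a penalty on squared differences between neighboring pixels, added to the classification loss with a hypothetical weight `lam`. A hypothetical frozen linear classifier again stands in for the CNN. Comparing a run without the penalty to one with it shows the prior pulling the result toward smoother images; blurring the image between steps is another way to get a similar effect.

```python
import numpy as np

# Hypothetical frozen toy classifier standing in for a pretrained CNN.
NUM_CLASSES, SIDE = 3, 8
W = np.random.default_rng(0).normal(size=(NUM_CLASSES, SIDE * SIDE))


def softmax(z):
    e = np.exp(z - z.max())  # subtract max for numerical stability
    return e / e.sum()


def smoothness(img):
    """Sum of squared differences between vertically and horizontally adjacent pixels."""
    return np.sum(np.diff(img, axis=0) ** 2) + np.sum(np.diff(img, axis=1) ** 2)


def smoothness_grad(img):
    """Gradient of smoothness(img) with respect to each pixel."""
    g = np.zeros_like(img)
    dv = np.diff(img, axis=0)  # img[i+1, j] - img[i, j]
    dh = np.diff(img, axis=1)  # img[i, j+1] - img[i, j]
    g[1:, :] += 2 * dv
    g[:-1, :] -= 2 * dv
    g[:, 1:] += 2 * dh
    g[:, :-1] -= 2 * dh
    return g


def optimize_input(target, lam, steps=300, lr=0.01, seed=1):
    """Minimize cross-entropy(target) + lam * smoothness over the image only."""
    img = np.random.default_rng(seed).normal(scale=0.1, size=(SIDE, SIDE))  # random noise
    onehot = np.eye(NUM_CLASSES)[target]
    for _ in range(steps):
        p = softmax(W @ img.ravel())
        grad = (W.T @ (p - onehot)).reshape(SIDE, SIDE)  # classification term
        grad += lam * smoothness_grad(img)               # natural-image prior
        img -= lr * grad
    return img


plain = optimize_input(target=2, lam=0.0)   # no prior: noise-like result
smooth = optimize_input(target=2, lam=0.5)  # with the neighboring-pixel prior
print(smoothness(plain), smoothness(smooth))
```

Both runs start from the same noise, so any difference in smoothness comes from the prior term alone.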

This way, the network should come up with a class representative that has some structure and is visually interpretable, giving us insight into how it perceives different objects. Kinda cool that a model trained to discriminate between classes is also a capable generator!

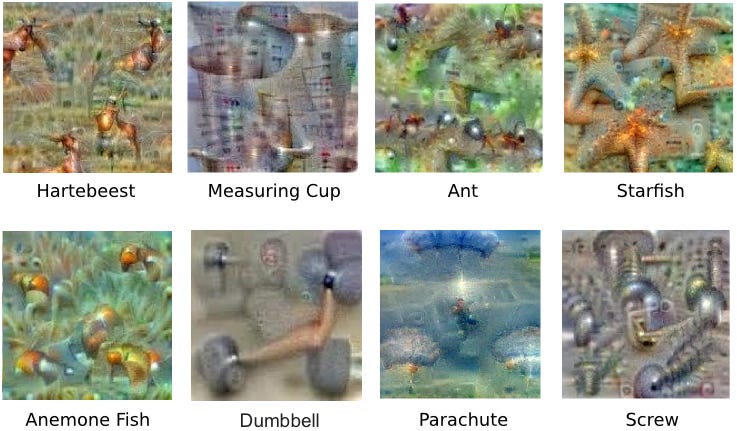

When this procedure is repeated across different classes, each generated image ideally captures the essence of its class, abstracting away from individual examples and showing the common patterns the model relies on to classify it.

Looks good… but is it? Well, this also reveals when things go wrong. For example, a human shows up in the parachute representation, probably because the model never saw a parachute without a person using it. The same holds for the dumbbell picture, which comes with muscular arms lifting the weights. In both cases, the network failed to distill the true class concept and relied on shortcuts and proxies at classification time.

The feedback loop of chaos

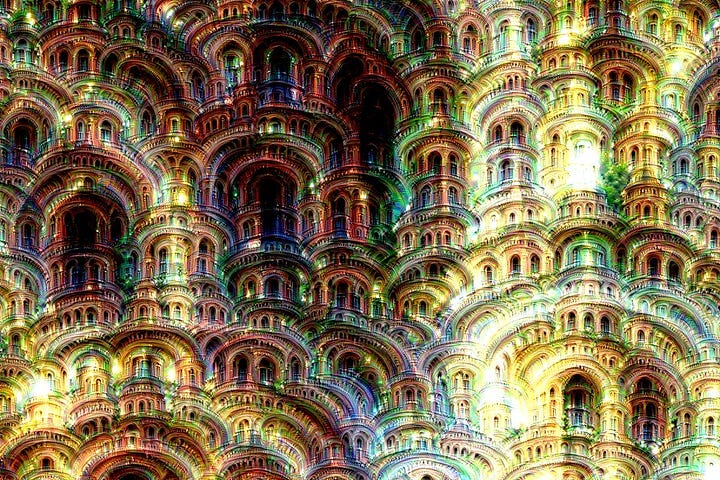

This is already cool by itself, but here comes the crazy part. Given an arbitrary natural image, we can pass it through the network, pick a layer, and ask the model to amplify whatever it sees at that stage. Depending on how deep the chosen layer is, the generated artifacts range from simple strokes and textures to very detailed, object-like patterns.

As before, the network stays frozen the whole time. We set up an optimization loop over the input image that maximizes the activations of a particular layer [2]. Each step produces a new version of the image that excites that layer more strongly. After a while, psychedelic patterns emerge seemingly out of nowhere.
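A minimal NumPy sketch of this loop, assuming a hypothetical frozen ReLU layer in place of a real CNN layer: the objective is half the squared L2 norm of the layer’s activations, and the image is updated by gradient ascent. Dividing each step by the gradient’s mean absolute value is an assumption here; it keeps the step size independent of the gradient’s overall scale.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical frozen network; its ReLU feature layer plays the role of the chosen CNN layer.
SIDE, FEATURES = 8, 16
W1 = rng.normal(size=(FEATURES, SIDE * SIDE)) / SIDE  # frozen weights


def layer_activations(img):
    return np.maximum(W1 @ img.ravel(), 0.0)


def objective(img):
    """Half the squared L2 norm of the chosen layer's activations."""
    return 0.5 * np.sum(layer_activations(img) ** 2)


def objective_grad(img):
    # d/dx 0.5*||relu(W1 x)||^2 = W1^T relu(W1 x): inactive units contribute nothing.
    return (W1.T @ layer_activations(img)).reshape(img.shape)


def dream(img, steps=20, step_size=0.01):
    img = img.copy()
    for _ in range(steps):
        g = objective_grad(img)
        # Gradient ASCENT, normalized by mean |g| so the step size ignores gradient scale.
        img += step_size / (np.abs(g).mean() + 1e-8) * g
    return img


photo = rng.normal(size=(SIDE, SIDE))  # stand-in for a natural image
dreamed = dream(photo)
print(objective(photo), objective(dreamed))  # activation strength before vs. after
```

Because the objective is built from squared ReLU outputs, it is convex in the image, so each ascent step can only increase it; that is the feedback loop in miniature.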

The fun part is doing this on images that have nothing to do with the original training dataset. Say you train a model to classify animals (so its features, especially the deeper ones, are specialized for that task) and feed it an image of clouds. The model will start forcing an animal interpretation onto the clouds, and the feedback loop on these layers amplifies it until alien animal species appear.

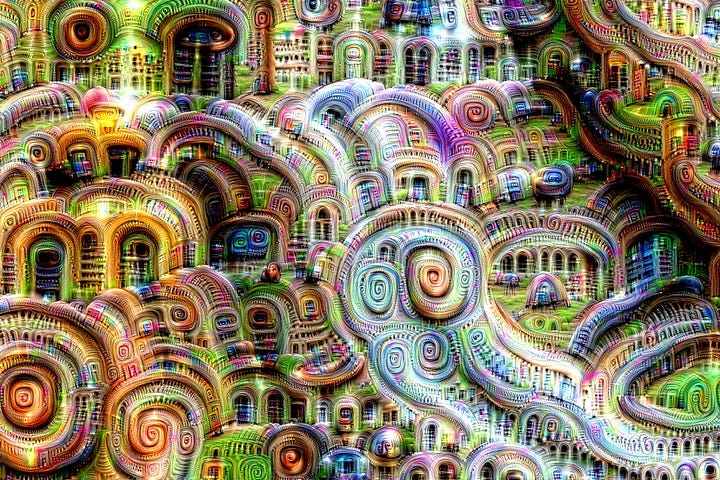

Now for the cherry on top, which is where the name DeepDream actually comes from. We pick a layer, start with a random image, and repeatedly alternate an optimization pass with a slight zoom, entering an endless stream of network consciousness and blended patterns that supposedly shows the things the network knows about [3].
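The dream-and-zoom loop can be sketched as follows, again with a hypothetical frozen ReLU layer standing in for a CNN layer. `zoom` crops the center of the frame and scales it back to full size with nearest-neighbor sampling; the zoom factor of 1.05 and the number of steps per frame are illustrative values, not ones taken from the original work.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical frozen layer standing in for a chosen CNN layer (32x32 grayscale frames).
SIDE = 32
W1 = rng.normal(size=(16, SIDE * SIDE)) / SIDE


def dream_step(img, step_size=0.01):
    """One gradient-ascent step on 0.5 * ||relu(W1 x)||^2."""
    act = np.maximum(W1 @ img.ravel(), 0.0)
    g = (W1.T @ act).reshape(img.shape)
    return img + step_size / (np.abs(g).mean() + 1e-8) * g


def zoom(img, factor=1.05):
    """Crop the central 1/factor of the frame and resize it back with nearest-neighbor sampling."""
    h, w = img.shape
    ch, cw = int(round(h / factor)), int(round(w / factor))
    top, left = (h - ch) // 2, (w - cw) // 2
    crop = img[top:top + ch, left:left + cw]
    rows = np.arange(h) * ch // h  # source row for each output row
    cols = np.arange(w) * cw // w  # source column for each output column
    return crop[rows[:, None], cols[None, :]]


def dream_sequence(img, num_frames=10, steps_per_frame=5):
    """Alternate a few optimization steps with a zoom, collecting one frame per cycle."""
    frames = []
    for _ in range(num_frames):
        for _ in range(steps_per_frame):
            img = dream_step(img)
        frames.append(img)
        img = zoom(img)
    return frames


frames = dream_sequence(rng.normal(scale=0.1, size=(SIDE, SIDE)))  # start from random noise
print(len(frames), frames[-1].shape)  # 10 (32, 32)
```

Each entry of the returned list is one frame; stitching the frames into a video gives the endless zoom effect.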

Add some ambient music, and the experience becomes even more mesmerizing. There are plenty of YouTube videos showcasing such sequences, and the effect works incredibly well.

Conclusion

These techniques are an entertaining way to inspect how a model approaches a classification task and what it has learned during training, combining deep technical insight with some fun artistic exploration.

But if we set aside the subjective creative part for a moment, the really important takeaway is the trick of input optimization. It shows up in other generative contexts and in reverse-engineering applications: for example, finding which inputs produce a certain output, or crafting inputs that trick a network into misclassifying data.
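As an illustration of the misclassification case, here is a sketch of an iterative gradient-sign attack in the spirit of projected gradient descent (PGD) adversarial attacks. It runs input optimization that increases the loss of the currently predicted label while keeping every pixel within `eps` of the original. The classifier is again a hypothetical frozen linear model, and `eps`, `alpha`, and `steps` are illustrative values.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical frozen toy classifier: 3 classes over flattened 8x8 images.
NUM_CLASSES, DIM = 3, 64
W = rng.normal(size=(NUM_CLASSES, DIM))


def softmax(z):
    e = np.exp(z - z.max())  # subtract max for numerical stability
    return e / e.sum()


def predict(x):
    return int(np.argmax(W @ x))


def attack(x0, label, eps=0.3, alpha=0.05, steps=20):
    """Gradient-sign ascent on the loss of `label`, projected onto an L-infinity ball around x0."""
    x = x0.copy()
    onehot = np.eye(NUM_CLASSES)[label]
    for _ in range(steps):
        grad = W.T @ (softmax(W @ x) - onehot)  # d(cross-entropy)/dx
        x = x + alpha * np.sign(grad)           # move to INCREASE the loss
        x = np.clip(x, x0 - eps, x0 + eps)      # stay close to the original input
    return x


clean = 0.05 * W[0]    # an input the toy model confidently assigns to some class
label = predict(clean)
adv = attack(clean, label)
print(label, predict(adv), np.abs(adv - clean).max())
```

The perturbation stays small in every pixel, yet the frozen model’s prediction changes: the same "optimize the input, not the weights" trick, pointed in a less playful direction.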

And that’s it, hope you enjoyed it!

References

[1] Inceptionism: Going Deeper into Neural Networks