Introduction

As discussed in previous posts, efficient self-supervised algorithms are key to pretraining models on vast unlabeled datasets and learning meaningful representations without explicit supervision. While next-word prediction has been the dominant approach for LLMs for many years, new techniques continue to emerge in computer vision.

Today, we cover BYOL (Bootstrap Your Own Latent) [1], a method that picks up where SimCLR [2] left off and removes the need for negative samples altogether, offering a simpler yet effective alternative. If you missed the SimCLR post or want a refresher on how it works, you can check it out here, as this article builds upon it.

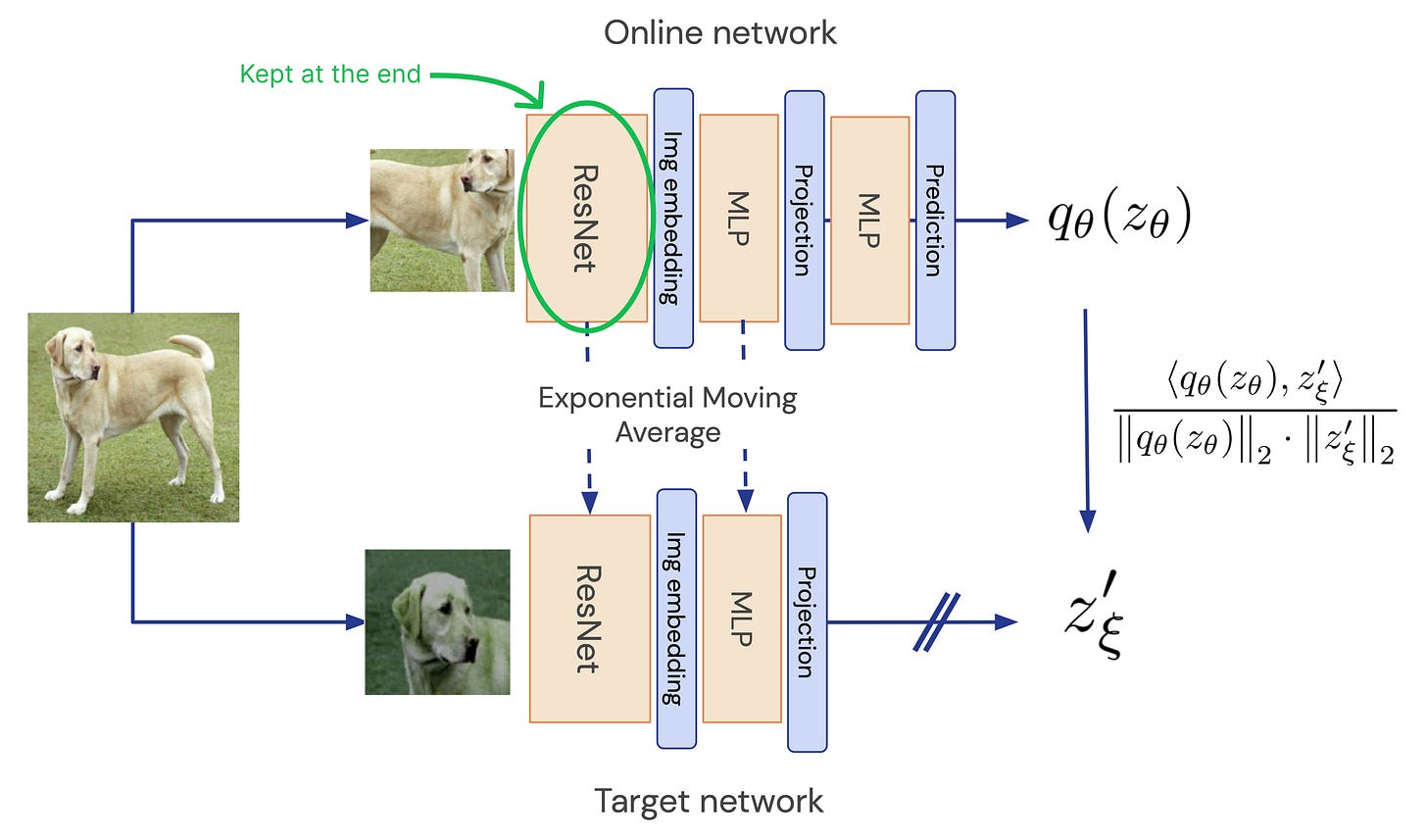

The online and target networks

BYOL builds on an idea that remains widely adopted in self-supervised learning today: instead of a single network, two networks take part in training:

Online network: actively trained through backpropagation, which is also the one kept at the end of training and used for downstream tasks.

Target network: not directly updated, but follows an exponential moving average (EMA) of the online network’s weights. In other words, the target is a more “stable” version of the online network, slowly adapting to changes.
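To make the EMA concrete, here is a minimal sketch in plain Python that treats each network's weights as a flat list of floats (a simplification; a real implementation would loop over framework tensors). The update is ξ ← τ·ξ + (1 − τ)·θ, where θ are the online weights and ξ the target weights. The cosine schedule that raises τ from a base value of 0.996 toward 1 follows the one described in the BYOL paper; the function names are hypothetical.

```python
import math


def target_decay(step: int, total_steps: int, tau_base: float = 0.996) -> float:
    """Cosine schedule: tau starts at tau_base (step 0) and reaches 1.0 at total_steps."""
    progress = step / total_steps
    return 1.0 - (1.0 - tau_base) * (math.cos(math.pi * progress) + 1.0) / 2.0


def ema_update(target: list[float], online: list[float], tau: float) -> list[float]:
    """Parameter-wise xi <- tau * xi + (1 - tau) * theta.

    No gradient is involved: the target only tracks the online weights."""
    return [tau * xi + (1.0 - tau) * theta for xi, theta in zip(target, online)]


# Usage: one update early in training (hypothetical weights).
online_weights = [1.0, 2.0]
target_weights = [0.0, 0.0]
tau = target_decay(step=0, total_steps=1000)                      # ~0.996
target_weights = ema_update(target_weights, online_weights, tau)  # ~[0.004, 0.008]
```

With τ this close to 1, the target moves only a small fraction of the way toward the online weights at each step, which is what makes it the more stable of the two networks.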

Like in SimCLR, both networks use an encoder (e.g., a ResNet) to extract features from images and a projection head to map these features into a lower-dimensional space. The encoder output is the representation used for downstream tasks, while the projection head is discarded after training: SimCLR found that the head's outputs are noticeably worse for downstream tasks, likely because the head throws away information that is not needed to solve the training task.

However, BYOL introduces an extra prediction head in the online network, making the architecture asymmetric between the two branches. The outputs of both the projection and prediction heads have the same low dimensionality.
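The asymmetry can be illustrated with a toy forward pass. This is a sketch under loud assumptions: every "network" is a tiny stack of random dense layers in plain Python, batch norm is omitted, dimensions are shrunk for readability (the paper pairs ResNet-50's 2048-d features with 4096-d hidden layers and 256-d outputs), and the target starts as an exact copy of the online encoder and projector, a choice many implementations make. All names are hypothetical. Only the online branch has a predictor, yet both branches end in vectors of the same size.

```python
import random
from typing import Callable

Vector = list[float]
Layer = Callable[[Vector], Vector]


def dense(in_dim: int, out_dim: int, rng: random.Random) -> Layer:
    """Bias-free dense layer with random Gaussian weights (toy stand-in for a trained layer)."""
    weights = [[rng.gauss(0.0, 1.0) for _ in range(in_dim)] for _ in range(out_dim)]
    return lambda x: [sum(w * v for w, v in zip(row, x)) for row in weights]


def relu(x: Vector) -> Vector:
    return [max(0.0, v) for v in x]


def chain(*layers: Layer) -> Layer:
    """Compose layers, applying them left to right."""
    def forward(x: Vector) -> Vector:
        for layer in layers:
            x = layer(x)
        return x
    return forward


def mlp_head(in_dim: int, hidden_dim: int, out_dim: int, rng: random.Random) -> Layer:
    """Two-layer MLP used for both the projector and the predictor."""
    return chain(dense(in_dim, hidden_dim, rng), relu, dense(hidden_dim, out_dim, rng))


def build_branches(in_dim=32, feat_dim=16, hidden_dim=24, proj_dim=8, seed=0):
    """Return (online, target, online_encoder) for a toy BYOL model."""
    def encoder_and_projector(rng: random.Random):
        encoder = dense(in_dim, feat_dim, rng)  # stands in for e.g. a ResNet
        projector = mlp_head(feat_dim, hidden_dim, proj_dim, rng)
        return encoder, projector

    # Same seed twice: the target starts as an exact copy of the online encoder/projector.
    online_encoder, online_projector = encoder_and_projector(random.Random(seed))
    target_encoder, target_projector = encoder_and_projector(random.Random(seed))
    # The predictor exists only in the online branch and keeps the output size unchanged.
    predictor = mlp_head(proj_dim, hidden_dim, proj_dim, random.Random(seed + 1))

    online = chain(online_encoder, online_projector, predictor)
    target = chain(target_encoder, target_projector)
    return online, target, online_encoder


online, target, encoder = build_branches()
x = [0.1 * i for i in range(32)]  # a fake flattened "image"
print(len(encoder(x)), len(online(x)), len(target(x)))  # 16 8 8
```

The 16-d encoder output plays the role of the representation kept for downstream tasks; the 8-d outputs only exist to compute the training loss.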

Training algorithm

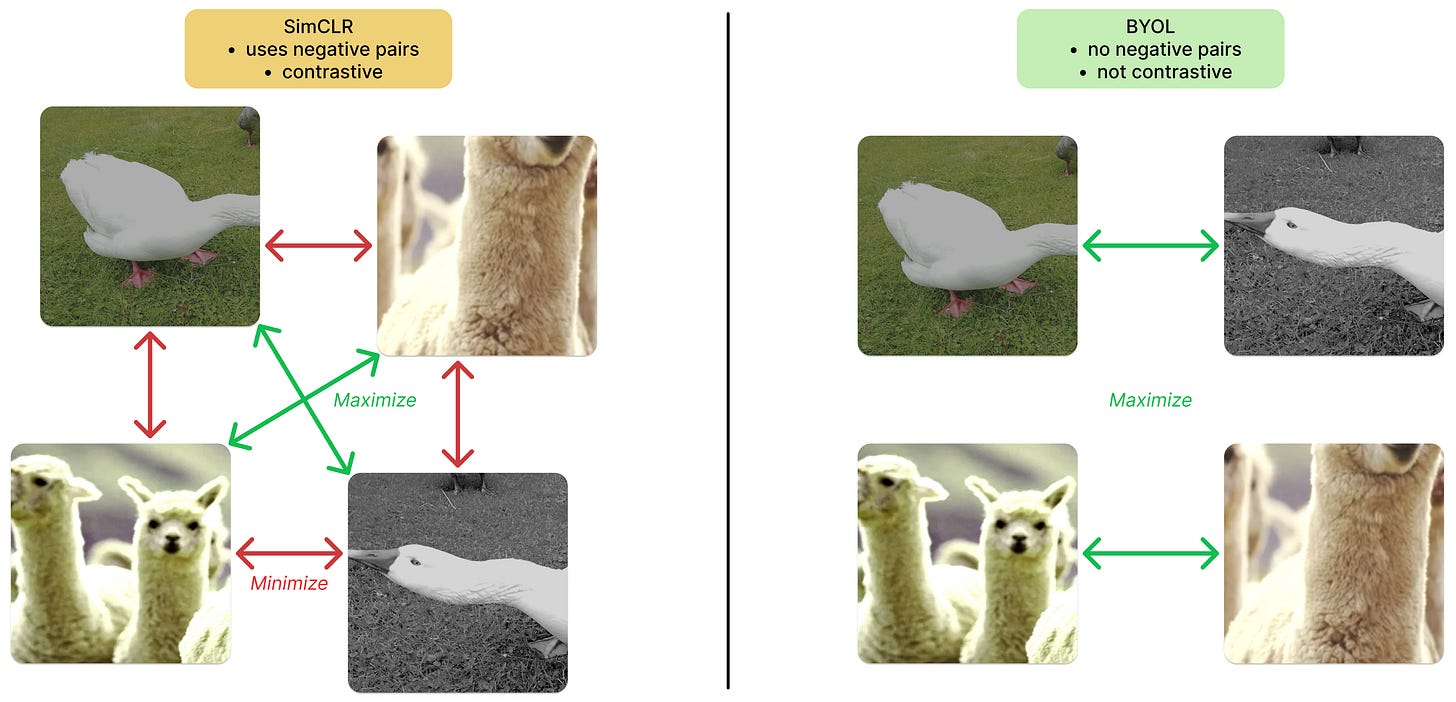

As in SimCLR, the end goal is to align the representations of different augmentations of the same image. However, instead of using a contrastive loss and negative samples, BYOL achieves this by having the online network predict the target network's representation. As a result, no negative samples are required at any stage, and there is no risk of false negatives, i.e., semantically similar images (say, two different photos of dogs) that a contrastive objective would mistakenly push apart.

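The loss is the mean squared error between the online prediction and the target projection. Since both vectors are L2-normalized first, this is equivalent to maximizing their cosine similarity. No gradient flows through the target branch. Because the architecture is asymmetric, the loss is symmetrized: each pair of augmented views is processed twice, once with the first view fed to the online network and the second to the target network, and once with the views swapped, and the two losses are summed.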
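A minimal sketch of the loss, assuming `online` and `target` are hypothetical callables that map an augmented view to a vector (for instance, the online and target branches described in this article). After L2 normalization, the squared error equals 2 − 2·cos(q, z′), which is why minimizing it is the same as maximizing cosine similarity. In a real framework, the target output would also be cut from the computation graph (e.g. with `.detach()` in PyTorch).

```python
import math


def l2_normalize(v: list[float], eps: float = 1e-12) -> list[float]:
    norm = max(math.sqrt(sum(x * x for x in v)), eps)
    return [x / norm for x in v]


def cosine_similarity(u: list[float], v: list[float]) -> float:
    return sum(a * b for a, b in zip(l2_normalize(u), l2_normalize(v)))


def byol_loss(prediction: list[float], target_projection: list[float]) -> float:
    """Squared error between L2-normalized vectors, i.e. 2 - 2 * cosine similarity.

    In a real framework the target projection must be detached (no gradient)."""
    p, z = l2_normalize(prediction), l2_normalize(target_projection)
    return sum((a - b) ** 2 for a, b in zip(p, z))


def symmetric_byol_loss(online, target, view_1, view_2) -> float:
    """Each view goes once through the online branch and once through the target branch."""
    return byol_loss(online(view_1), target(view_2)) + byol_loss(online(view_2), target(view_1))


q, z = [1.0, 2.0, 2.0], [2.0, 1.0, 2.0]
print(byol_loss(q, z), 2 - 2 * cosine_similarity(q, z))  # both ~0.2222 (= 2/9)
```

Orthogonal vectors give a loss of 2 and identical directions give 0, so the loss is bounded and ignores vector magnitudes.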

BYOL uses essentially the same image augmentations as SimCLR, plus solarization. The pipeline is a random crop resized to a fixed resolution, followed by a random horizontal flip, color distortion (random brightness, contrast, saturation, and hue), and an optional grayscale conversion. Finally, Gaussian blur and solarization are applied, with different probabilities for the two views of each image.
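The per-view probabilities can be written down as a small config. The values below are those reported in the BYOL paper's appendix, as we recall them (worth double-checking against the paper); the class and function names are hypothetical, and the sketch only samples which steps fire rather than transforming real images.

```python
import random
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class ViewAugmentation:
    """Probability of applying each step to one view, in application order."""
    random_resized_crop: float = 1.0
    horizontal_flip: float = 0.5
    color_jitter: float = 0.8   # brightness 0.4, contrast 0.4, saturation 0.2, hue 0.1
    grayscale: float = 0.2
    gaussian_blur: float = 1.0
    solarize: float = 0.0


# The two views differ only in how often blur and solarization are applied.
VIEW_1 = ViewAugmentation(gaussian_blur=1.0, solarize=0.0)
VIEW_2 = ViewAugmentation(gaussian_blur=0.1, solarize=0.2)


def sample_steps(cfg: ViewAugmentation, rng: random.Random) -> list[str]:
    """Draw which augmentation steps are applied to one view (names only, no image ops)."""
    return [f.name for f in fields(cfg) if rng.random() < getattr(cfg, f.name)]


rng = random.Random(0)
print(sample_steps(VIEW_1, rng))
print(sample_steps(VIEW_2, rng))
```

Making the two views asymmetric means the online and target branches regularly see differently degraded inputs, which keeps the prediction task from being trivial.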

Why does BYOL work?

A natural question arises: if negative samples aren't necessary, what prevents the network from collapsing into trivial solutions? Both branches could predict a constant value and the loss would be minimized, but the representations would be completely useless. This issue is known as representation collapse.

BYOL avoids this collapse through its asymmetric architecture, with the extra predictor, combined with the EMA update mechanism. First, the target parameters are not updated by gradient descent: they follow a moving average of the online parameters, and no gradient flows into the target branch. The target update is therefore not in the direction of the loss gradient, which means the joint updates of the two networks do not directly minimize the training loss.
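This first point can be seen in a deliberately tiny, hypothetical example: a scalar "BYOL" in which the online branch is a weight `w` (encoder and projector folded together) followed by a predictor weight `p`, and the target branch is a single weight `xi`. Normalization is dropped, since normalizing a scalar would leave only its sign. Gradients are taken only with respect to the online parameters; the target weight never receives a gradient and simply drifts toward the updated online weight, following the order in the paper's algorithm (optimizer step first, then the EMA update).

```python
def toy_byol_step(w, p, xi, x1, x2, lr=0.1, tau=0.99):
    """One update of a hypothetical scalar "BYOL".

    Online branch: prediction = p * (w * x1)   (w: encoder+projector, p: predictor)
    Target branch: projection = xi * x2        (xi: target weight, never differentiated)
    Loss: (prediction - projection) ** 2       (normalization dropped for scalars)
    """
    residual = p * w * x1 - xi * x2
    loss = residual ** 2
    # Gradients w.r.t. the online parameters only; the target acts as a constant.
    grad_w = 2.0 * residual * p * x1
    grad_p = 2.0 * residual * w * x1
    w, p = w - lr * grad_w, p - lr * grad_p
    # The target moves toward the *updated* online weight, independently of the loss.
    xi = tau * xi + (1.0 - tau) * w
    return w, p, xi, loss


w, p, xi = 1.0, 1.0, 0.5
w, p, xi, loss = toy_byol_step(w, p, xi, x1=1.0, x2=1.0)
print(loss, w, p, xi)  # loss 0.25, then w and p ~0.9, xi ~0.504
```

Setting `tau=1.0` would freeze the target forever, while `tau=0.0` would copy the online weight outright at every step; BYOL operates in between.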

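Second, the paper shows that if the predictor is optimal (i.e., it exactly minimizes the expected squared error between its prediction and the target), undesirable equilibria such as collapse are unstable. This is good news as long as the predictor stays close to optimal during training, which the authors hypothesize is the main role of the slowly moving target network.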
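The argument can be summarized as follows, in the paper's notation as we understand it: z_θ is the online projection of one view, z′_ξ the target projection of the other view, and q the predictor. The optimal predictor is the conditional expectation of the target, and plugging it in turns the gradient with respect to the online parameters into the gradient of a conditional variance:

```latex
\begin{aligned}
q^\star &= \arg\min_{q} \mathbb{E}\left[\lVert q(z_\theta) - z'_\xi \rVert_2^2\right]
  \;\Longrightarrow\; q^\star(z_\theta) = \mathbb{E}\left[z'_\xi \mid z_\theta\right] \\
\nabla_\theta \mathbb{E}\left[\lVert q^\star(z_\theta) - z'_\xi \rVert_2^2\right]
  &= \nabla_\theta \mathbb{E}\left[\textstyle\sum_i \operatorname{Var}\left(z'_{\xi,i} \mid z_\theta\right)\right] \\
\operatorname{Var}(X \mid Y, Z) &\le \operatorname{Var}(X \mid Y)
\end{aligned}
```

Since conditioning on more information can only reduce conditional variance, a collapsed z_θ that carries no information about the input maximizes this quantity, so descending it pushes the online network away from collapse. That is the idealized sense in which collapsed equilibria are unstable.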

Key innovations compared to SimCLR

To sum up, the major contributions of BYOL include:

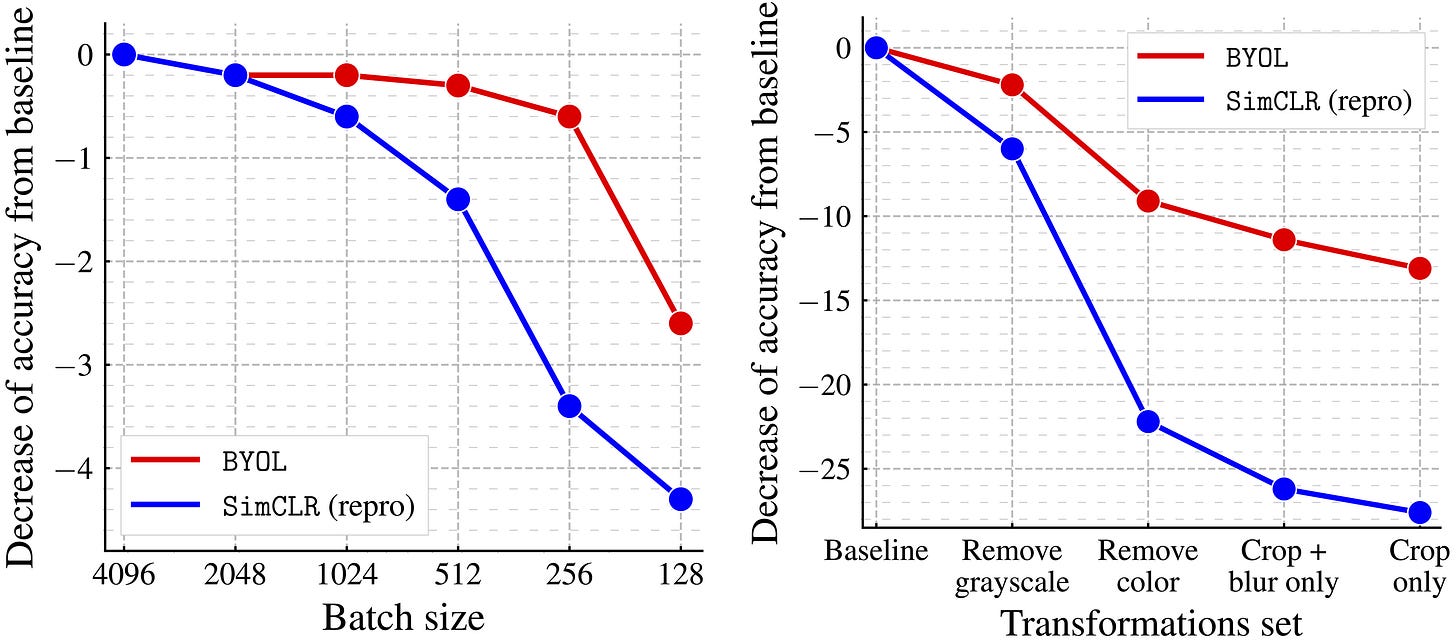

Elimination of negative samples: unlike SimCLR, BYOL completely removes the need for negative pairs, simplifying the training process by avoiding issues like false negatives and the necessity of large batch sizes.

Dual-network setup: BYOL uses an online and target network pair with asymmetric parameter updates rather than a single network. The online network is the only one actively trained via backpropagation.

Prediction head: in addition to the projection head introduced in SimCLR, BYOL employs an additional prediction head (a small MLP) in the online network. This extra component enforces asymmetry between the two branches and is key to avoiding collapse.

These design choices make BYOL more robust to the choice of augmentations and to batch size, two factors SimCLR is highly sensitive to. While color-histogram shortcuts might be enough to solve a contrastive task (two crops of the same image tend to share similar color distributions), BYOL is incentivized to keep additional information in its online network to improve its predictions of the target representation, which makes it far less sensitive to the removal of color distortion.

Conclusions

BYOL represents a change of direction in self-supervised learning, moving from contrastive learning to a self-distillation paradigm built on asymmetric architectures. This shift has inspired many follow-up works and remains prevalent today, partly because it reduces overall complexity.

While highly effective, BYOL still has some limitations, including sensitivity to hyperparameters such as the EMA decay rate, dependence on strong augmentations, and somewhat reduced interpretability compared to contrastive methods. Also, vision transformers emerged shortly after BYOL was published (yes, BYOL predates ViT), opening new possibilities that later works have explored.

Stay tuned to learn more about these!

References

[1] J.-B. Grill et al., Bootstrap Your Own Latent: A New Approach to Self-Supervised Learning, NeurIPS 2020

[2] T. Chen et al., A Simple Framework for Contrastive Learning of Visual Representations, ICML 2020