Introduction

Open-source Stable Diffusion and FLUX models have made high-quality image generation accessible to anyone. However, as general-purpose foundation models, they are not tailored to individual subjects or specific domains. In other words, they excel at generalization but not specialization.

Today, in the first article of our monthly series on Stable Diffusion personalization, we look at how Low-Rank Adaptation (LoRA), a popular fine-tuning method in the NLP community, can also adapt generative models on a single GPU without retraining them from scratch or fine-tuning all of their parameters.

Motivation

Low-Rank Adaptation was originally proposed in 2021 by Microsoft [1] as an efficient method for adapting LLMs to downstream tasks by training only a small number of parameters. This line of work makes sense because:

As pretrained models continue to scale, full fine-tuning becomes increasingly impractical. Just a single backward pass can easily eat up all GPU memory.

Tuning all the weights is often overkill and may lead to catastrophic forgetting, where the model loses important knowledge from its pretraining.

A few years later, its enormous impact on democratizing AI is clear, especially as models have grown larger. LoRA is one of those elegant ideas that are impossible to unsee, and it allows regular users to fine-tune powerful models on hardware such as the free GPUs available on Google Colab or Kaggle.

What is it all about? In simple words, LoRA approximates the change that new data introduces to a large (typically square) weight matrix with the product of two much smaller, low-rank matrices (thin, rectangular ones). This linear algebra trick leads to massive savings in memory and compute during training and, once the update is merged into the original weights, zero added latency at inference time. Sounds ideal, and for once, it actually is!
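
To make the shapes concrete, here is a small NumPy sketch of our own (not code from the LoRA paper), using the 1024×1024 example discussed later with a rank of 4. The product of a 1024×4 and a 4×1024 matrix has the full shape of W, but its rank is at most 4, and the two factors together hold far fewer numbers than a dense update. The variable names and the random values are purely illustrative.

```python
import numpy as np

d, k, r = 1024, 1024, 4  # W is d x k; r is the LoRA rank (illustrative values)
rng = np.random.default_rng(0)

B = rng.standard_normal((d, r))  # tall, thin factor
A = rng.standard_normal((r, k))  # short, wide factor
delta_W = B @ A                  # low-rank stand-in for the weight update

print(delta_W.shape)                   # (1024, 1024): same shape as W
print(np.linalg.matrix_rank(delta_W))  # 4: rank is bounded by r
print(A.size + B.size, d * k)          # 8192 1048576
```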

Understanding LoRA

A neural network typically boils down to a sequence of matrix multiplications, with linear layers serving as the building blocks of most architectures. This is especially true for Transformers, which have a deeply linear structure, as explained in post #3: apart from normalizations and nonlinearities such as activation functions and the attention softmax, MLPs and attention layers are compositions of linear projections.

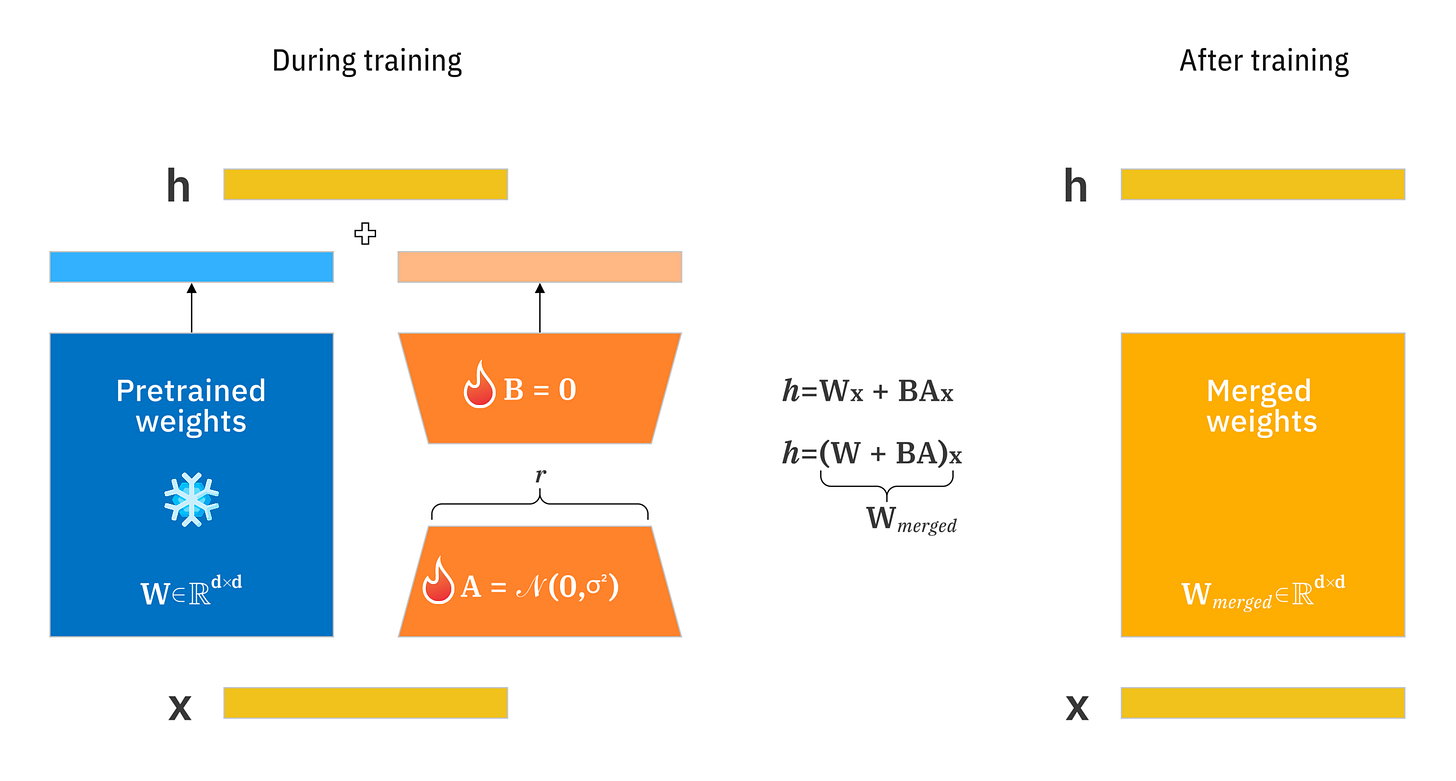

The idea is that during fine-tuning, each pretrained weight matrix W is updated based on gradients from new batches of data. Once all the updates are accumulated, W effectively becomes a new matrix W + ΔW, where ΔW has the same dimensions as W.

Now, this update doesn’t strictly need to modify the original weight matrix in place! Instead, it can be viewed as pairing the original matrix W, which remains intact, with a second matrix that captures the change, and summing both contributions in the forward pass.
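
In equation form, for an input vector x and a weight matrix of shape d×k, the fine-tuned layer computes:

```latex
h = (W + \Delta W)\,x = W x + \Delta W\,x,
\qquad W,\ \Delta W \in \mathbb{R}^{d \times k},\quad x \in \mathbb{R}^{k}
```

Because matrix multiplication distributes over addition, the update can live in its own matrix and be added to the output of the frozen W. A dense ΔW, however, still has d·k entries, exactly as many as W, so keeping it separate saves nothing by itself; the savings come from factorizing it.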

The genius idea of LoRA is to introduce two small matrices A and B of low rank r such that their product BA approximates ΔW and matches the shape of W. The fact that these matrices are kept separate from W and are significantly smaller (e.g., W might be 1024×1024, while A and B can be 4×1024 and 1024×4, respectively, for r = 4) serves three main purposes:

Efficient training. During training, only a tiny number of parameters need to be updated compared to modifying the matrix W in place, and optimizer states (such as Adam's moment estimates) only need to be stored for A and B. In the example above, it's 8×1024 = 8,192 parameters vs. 1024×1024 = 1,048,576, a 128× reduction. Savings on this scale allow significant model customization in hours or even minutes, instead of days.

Minimal deviation from the prior. Fine-tuning is often done on a much smaller dataset than pretraining, and there is probably not enough data to reliably learn a full-rank ΔW. The low rank acts as a regularizer: because W stays frozen and the update is confined to the few parameters in A and B, LoRA reduces the risk of catastrophic forgetting while leaving just enough capacity to learn from the new data.

Efficient serving. A single frozen pretrained model can be shared by many LoRA modules. We can switch between fine-tuned variants by swapping only the matrices A and B, which reduces both the storage requirement and the task-switching overhead. If we want a single model instead, we can merge the update into the original weights by computing BA and adding it element-wise to W, resulting in zero additional inference overhead!
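
The three points above can be sketched in a few lines of NumPy. This is a minimal, forward-pass-only illustration under our own assumptions, not the Diffusers or PEFT implementation: the class `LoRALinear`, its `r`, `alpha` and `seed` parameters, and the 0.01 standard deviation used for A are all hypothetical choices. It follows the LoRA paper [1] in initializing A with random Gaussian values and B with zeros (so BA starts at zero) and in scaling the update by alpha/r.

```python
import numpy as np


class LoRALinear:
    """Hypothetical sketch: frozen weight W plus a low-rank update scale * (B @ A)."""

    def __init__(self, W, r=4, alpha=4.0, seed=0):
        d, k = W.shape
        rng = np.random.default_rng(seed)
        self.W = W                                   # frozen pretrained weight, shape (d, k)
        self.A = rng.normal(0.0, 0.01, size=(r, k))  # trainable, random Gaussian init
        self.B = np.zeros((d, r))                    # trainable, zero init, so B @ A == 0
        self.scale = alpha / r                       # update scaled by alpha / r, as in [1]

    def forward(self, x):
        # Frozen path plus low-rank path (x -> r dims -> d dims); W is never modified.
        return x @ self.W.T + self.scale * (x @ self.A.T) @ self.B.T

    def merged_weight(self):
        # For serving: fold the update into one matrix, W + scale * (B @ A).
        return self.W + self.scale * (self.B @ self.A)


rng = np.random.default_rng(1)
W = rng.standard_normal((1024, 1024))
layer = LoRALinear(W, r=4, alpha=4.0)
x = rng.standard_normal((2, 1024))  # a batch of two input vectors

print(np.allclose(layer.forward(x), x @ W.T))  # True: the update starts at zero

layer.B = rng.standard_normal((1024, 4))       # pretend B has been trained
merged = layer.merged_weight()
print(np.allclose(layer.forward(x), x @ merged.T))  # True: same output, single matmul
```

In a real training loop, only `A` and `B` would receive gradients, and swapping adapters amounts to replacing those two arrays while `W` stays shared across all of them.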

LoRA in Stable Diffusion

The latent denoising model underlying SD-like architectures, whether based on the classic U-Net (as in SD v1.x and v2.x) or on newer Transformer backbones derived from DiT [2] (as in SD3.x and FLUX), is packed with attention layers: self-attention and cross-attention in the U-Net, and joint text-image attention in the Transformer-based variants.

These attention mechanisms rely on linear projections to compute queries, keys, and values. That is exactly where LoRA acts, injecting the small trainable matrices into these linear projections without touching the original weights. In practice, LoRA is typically applied to the query and value projections (and often to the key and output projections as well) in:

Cross-attention layers connecting the denoising model to the text encoder. This allows the model to personalize how it interprets prompt-conditioned features and to assign an “identity” to a particular prompt.

Self-attention layers within the denoiser, relating image features across spatial positions. This makes it possible to personalize the visual style and to generate content that was not already in the pretraining data.
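
To see where the adapters sit, here is a toy single-head cross-attention in NumPy with LoRA on the query and value projections only. Everything is a simplified assumption for illustration: one head, no output projection, and made-up dimensions rather than those of any real Stable Diffusion checkpoint. Queries come from image latent tokens, while keys and values come from text-encoder tokens.

```python
import numpy as np

# Toy single-head cross-attention with LoRA on the query and value projections.
# All sizes and weights are hypothetical; real SD layers are multi-head and larger.
rng = np.random.default_rng(0)
d_img, d_txt, d_head, r = 64, 32, 16, 4
scale = 1.0  # alpha / r

# Frozen pretrained projections (scaled to keep activations around unit size)
Wq = rng.standard_normal((d_head, d_img)) / np.sqrt(d_img)
Wk = rng.standard_normal((d_head, d_txt)) / np.sqrt(d_txt)
Wv = rng.standard_normal((d_head, d_txt)) / np.sqrt(d_txt)

# Trainable LoRA "A" factors for the query and value projections only
Aq = rng.normal(0.0, 0.01, size=(r, d_img))
Av = rng.normal(0.0, 0.01, size=(r, d_txt))


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def cross_attention(img_tokens, txt_tokens, Bq, Bv):
    q = img_tokens @ (Wq + scale * Bq @ Aq).T  # queries from image latents, LoRA-adapted
    k = txt_tokens @ Wk.T                      # keys from text tokens, left untouched
    v = txt_tokens @ (Wv + scale * Bv @ Av).T  # values from text tokens, LoRA-adapted
    attn = softmax(q @ k.T / np.sqrt(d_head))  # (n_img, n_txt) attention weights
    return attn @ v                            # (n_img, d_head)


img = rng.standard_normal((10, d_img))  # 10 latent positions
txt = rng.standard_normal((5, d_txt))   # 5 prompt-token embeddings

zeros = np.zeros((d_head, r))  # B starts at zero, so the adapters are a no-op
out_base = cross_attention(img, txt, zeros, zeros)

# Pretend fine-tuning has moved the B factors away from zero
Bq_tuned = rng.standard_normal((d_head, r))
Bv_tuned = rng.standard_normal((d_head, r))
out_tuned = cross_attention(img, txt, Bq_tuned, Bv_tuned)

print(out_base.shape)                    # (10, 16)
print(np.allclose(out_base, out_tuned))  # False
```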

There is typically no reason to adjust the VAE, which compresses images into latents and decompresses them back, since it works well across almost all natural images out of the box. This means that all it takes to fine-tune Stable Diffusion on custom subjects or domains, like your dog, a product line, or a visual style, is to slightly modify the latent denoising model.

Since we update a set of parameters that is often less than 1% of the model's size, training becomes feasible even on a single consumer-grade GPU in a matter of hours. The Diffusers library streamlines the LoRA fine-tuning process with built-in training scripts [3] that take care of everything under the hood. The only thing left for you is to create a dataset to train on!
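
As a back-of-the-envelope check of the "less than 1%" figure, the sketch below counts LoRA parameters for a list of adapted projection shapes. The shapes and the helper `lora_fraction` are hypothetical placeholders, not the actual layer sizes of any Stable Diffusion or FLUX checkpoint. For a d×k projection the ratio is r(d + k)/(dk), which for a square d×d matrix is 2r/d, so it stays small as long as r ≪ d; relative to the whole model the fraction is lower still, because many layers receive no adapter at all.

```python
def lora_fraction(shapes, r):
    """Count LoRA vs. frozen parameters for the adapted projections.

    shapes: list of (d, k) weight shapes that receive an adapter (hypothetical).
    r: LoRA rank.
    """
    lora = sum(r * (d + k) for d, k in shapes)  # B is d x r, A is r x k
    frozen = sum(d * k for d, k in shapes)
    return lora, frozen, lora / frozen


# Hypothetical: query, key, value and output projections of one 1024-wide attention layer
shapes = [(1024, 1024)] * 4
lora, frozen, frac = lora_fraction(shapes, r=4)
print(lora, frozen, frac)  # 32768 4194304 0.0078125 (about 0.78%)
```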

Conclusions

LoRA also opens the door to personalizing large diffusion models, helping creators and researchers to experiment efficiently. To get an idea of the impact of significantly lowering computational barriers, have a look at this plot referenced by Andrej Karpathy at the YC AI Startup School a couple of weeks ago [4].

This plot shows the equivalent of GitHub in the AI era, where "personalized" model weights on Hugging Face branch off from their base model like forks of a code repository. When this was shown on stage, I was genuinely blown away: the biggest blob is the set of LoRA adapters built on the recent FLUX.1-dev model, and the second one belongs to Stable Diffusion XL. In other words, custom image generators are hugely popular!

Next week, we will dive deeper into model personalization with DreamBooth, an approach designed for deep identity customization from minimal training data, with as few as 5–10 images of a subject. The good news is that LoRA addresses a complementary problem, memory and compute savings, so the two can be used together to make personalization even more efficient. See you next week!

References

[1] LoRA: Low-Rank Adaptation of Large Language Models

[2] Scalable Diffusion Models with Transformers