Introduction

Breaking the usual flow with something different today. I wasn’t sure whether to post, but then I recalled a fun thread and figured it was worth sharing, even briefly.

This is not a paper, but rather a Hacker News [1] and Reddit [2] discussion (yes, these are the places where genuine findings are shared XD) that went viral back in February 2024. I vividly remember coming across it a few months later while reading related work for my master’s thesis at ETH, and spending hours going down the rabbit hole just because it sounded so cool.

The claim is that the VAE used in Stable Diffusion 1.x and 2.x is fundamentally flawed, and that instead of distributing information evenly, certain latent dimensions end up carrying far more global information than others. Yet, since it was trained on billions of images and because deep learning is remarkably good at compensating for flaws, it still works just fine in practice. As one user memorably put it, “Backprop doesn’t give a s**t”, and that's just hilariously true. So, what’s going on?

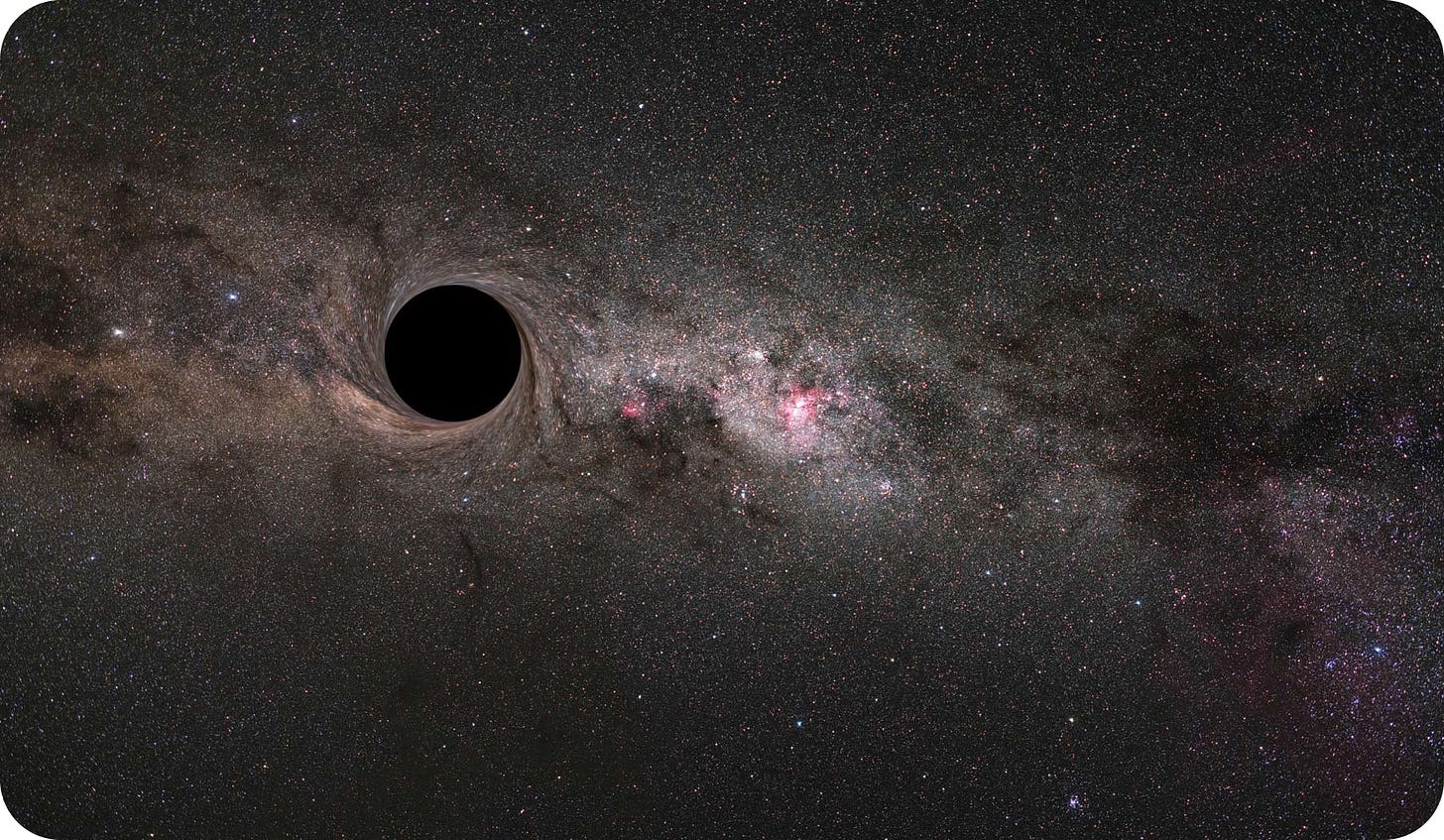

A black hole in latent space

Long story short, Stable Diffusion uses latent diffusion to generate images, which means it does not generate directly in pixel space, but rather in the compressed latent space of a Variational Autoencoder (VAE). This VAE is first trained separately on the task of image compression and reconstruction, then kept frozen and used to encode images during training and decode the latents produced by the diffusion model back into pixels at inference time. More about VAEs here:

But like any deep learning model, the VAE behaves a bit like a black box. And while it learns to compress and reconstruct images faithfully for Stable Diffusion, strange artifacts emerge in its latent space, and this is where the “black hole” issue comes in.

The whole question can be summarized as: for any image, there is a spot in latent space (which, remember, is a tensor of size H/8 × W/8 × 4) where the VAE is trying to smuggle global information about the image, instead of distributing it equally across the whole representation as it is supposed to do.

This is exactly the situation that the KL-divergence term in the VAE loss is supposed to prevent, enforcing that the latent space follows a smooth, uniform Gaussian distribution instead of collapsing information into single points!

Given that, if we modify that special point in the latent, we end up affecting the entire image when decoded, because the spot carries global information. On the flip side, changing any other location in the latent grid only has a local effect, tweaking a local patch of texture or minor details rather than the whole picture.

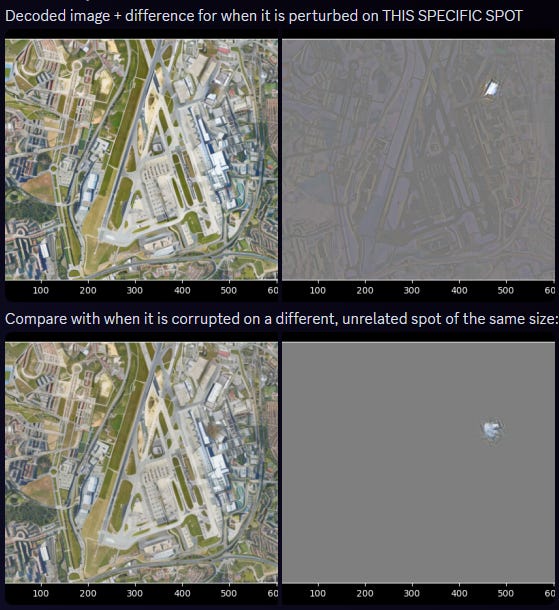

This can be seen in the experiments carried out by the author of the post on this aerial image, where overwriting just a tiny 3×3 region around the “black hole” causes global changes across the entire decoded image. In contrast, perturbing a 3×3 region anywhere else in the latent grid only leads to small, localized changes.

The bottom case is what we would normally expect from a convolutional VAE, where locality dominates, but the use of self-attention in Stable Diffusion’s VAE makes it possible for global information to route into one concentrated spot. In general, points that are close in latent space should correspond to points that are close in pixel space.

Practical implications

Well… basically none, as demonstrated by Stable Diffusion models working very well! In fact, the VAE encodes and decodes images perfectly fine, and any generative model trained on that latent space will adapt to this “singularity” and eventually compensate for the uneven distribution of information.

This entire thing is a matter of efficiency, because the Stable Diffusion denoising model built on top of this latent space could, in theory, be more effective if it didn’t have to work around the flaw. Instead of spending capacity correcting for the black hole (i.e., waste effort routing global context around through local interactions), it could use that power to generate sharper, cleaner, or more consistent images.

The black hole is also visible in the generated images. Because these are the rules the denoiser has to play by, it naturally learns to construct latents that smuggle global information too. This is next-level “overfitting” to an abstract concept, pretty cool!

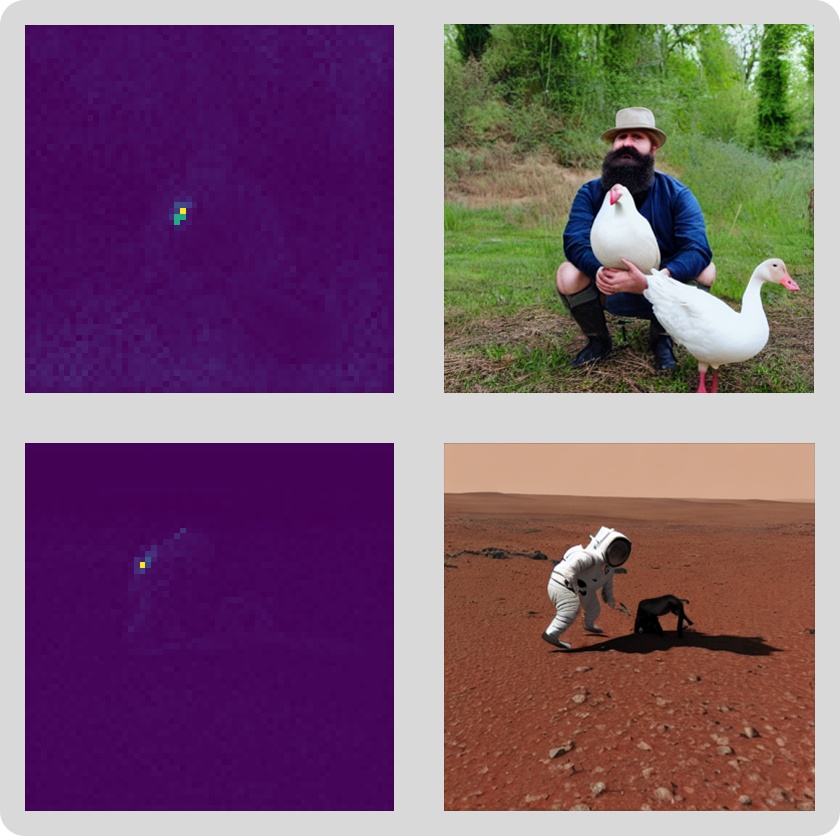

This visualization was made by zeroing out each possible spatial location in two generated 64×64×4 latents, which would give the images on the right when decoded. For every location, the pixel intensity represents the reconstruction error between the original image and the perturbed one. Bright regions mark positions where zeroing out at that spot across all 4 latent channels causes large changes in the image, i.e., clear evidence of the diffusion model smuggling global information there.

Before proceeding, just take a moment to appreciate how far image generation has come from a couple of years ago! These images look so ugly.

The cause

This “black hole” issue likely comes from how the VAE was trained. In theory, the KL-divergence term in the VAE loss should penalize any attempt to concentrate too much global information in a single spot, forcing the latent space to stay smooth, uniform, and Gaussian-like.

In practice, it seems this KL term was weighted very lightly to prioritize much-needed reconstruction quality. The unintended side effect is that the model learns a shortcut for some unknown reason, smuggling global information through one concentrated region of the latent instead of spreading it evenly across all locations.

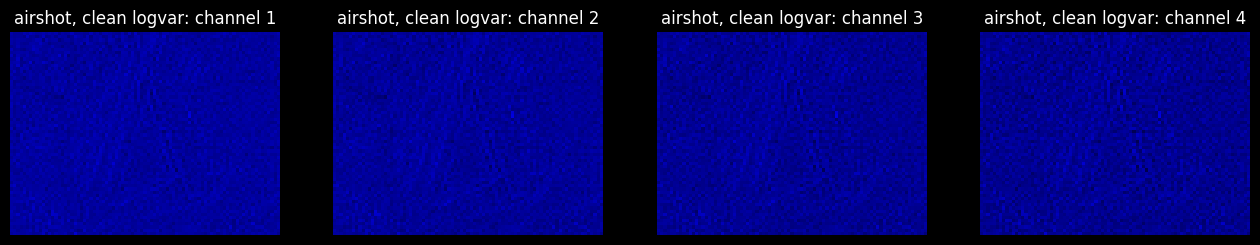

This anomaly clearly shows up in the log variance (logvar) maps displayed above, where one pixel region has abnormal values compared to the rest of the latent grid. Remember, the VAE outputs a distribution via mean and logvar for each latent location, although in practice we always use just the mean as a representation.

In contrast, the logvar plots for a healthy VAE (such as the one used in Stable Diffusion XL) would not show that anomalous spot when encoding the same aerial image. And this more balanced behavior holds in general.

Conclusion

Diffusion models are powerful, but only as good as the VAE that comes before them. If the VAE doesn’t provide a clean, well-structured latent space, the diffusion process has to waste effort compensating instead of pushing image quality further. As we saw above, the generative model learns to create images that also contain latent artifacts.

That’s it, nothing groundbreaking or a critical flaw that prevents the model from working, but really just a fun fact reminding us of how hard things are to control, and that backpropagation trains in an asymmetric way without caring. See you next week for the ultimate DINO series deep dive, subscribe not to miss it!

References

[1] Hacker News discussion “Stable Diffusion’s VAE is flawed”

[2] Reddit post “The VAE used for Stable Diffusion 1.x/2.x and other models (KL-F8) has a critical flaw, probably due to bad training, that is holding back all models that use it”

very interesting fact, i didn't know this!! great read.

one question i have where i am confused: the 2x2 plot with the duck and moon walking shows the reconstruction error on the left after zeroing out each channel in the latent space.

however, if i understand correctly, we zero out the values in the 64x64x4 latent, so each channel gets zeroed out in the 64x64 "image". how does this then map to the 512x512 error map? is is just regular matplotlib upscaling? or is there some other mapping happening? i didn't check reddit/hackernews, was just wondering if you know more by any chance! or did i miss something crucial?